Debugging real-time systems is difficult. The real world keeps moving while you’re trying to understand what the CPU is doing at any given moment. Interrupts happen. Data arrives. Buffers empty and need refilling. The simple act of stepping the CPU through its process changes the process.

What if you could slow the real world down too and spend 15 minutes inside a microsecond?

Traditionally, real-time systems set timers that tick every so many microseconds or milliseconds. Hardware spins at a set rate (e.g. disk drives rotating at around 300 revolutions per minute) and serial lines pass data at a set number of bits per second (e.g. 300 baud, 9600 baud or a gigabit per second). If the CPU is out of action (because you’re debugging it) when something happens, then the usual response doesn’t occur, the system doesn’t behave in its usual manner, and whatever you’re debugging isn’t really the system you think you’re testing.

With z80sim and Cromix, I ran into timing issues. Cromix is interrupt driven and it expects things to happen at certain times. If you mess up the timing then deadlocks occur. In my case, at one point, I messed up the timing for a UART Transmit Buffer Empty signal and the output buffer would fill and never empty. The system would produce some output and then hang. I’d introduced delays in the wrong places and it all fell apart.

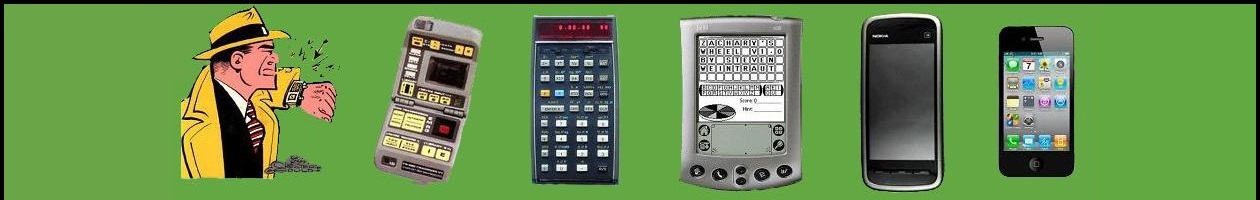

Now, whilst the correct action should have been to better match what goes on with a real Cromix system, I was trying to introduce something that isn’t in a real Cromix system. The problem with emulators or simulators is that they normally spend much of their life in tight loops doing nothing. That’s the ‘wait for keypress’ loop (or for a mag card programmable calculator, ‘wait for keypress or card present or W/PRGM-RUN switch changed’ loop). It uses a lot of the host CPU, just checking that nothing is happening. That’s not much of an issue for a desktop simulator; but it is a problem for one on a mobile phone. The impact on battery life is significant.

Emulators for phones usually have some means of slowing the wait loop down, or freeing up host CPU cycles, when nothing is happening. This should leave the emulator responsive when things DO happen, but reduce the battery consumption when they don’t.

On a desktop system, what you see instead is CPU usage pinned at 100% (or 100%/N of the total on a host with N cores, since the emulator keeps one core busy). There’s no battery to drain (unless you’re on a laptop), but in many cases the system never sleeps.

I wanted to slow z80sim down if nothing was happening. More precisely, “nothing happening” might mean: no user program loaded and running, no disk drive accesses, nothing being sent to the console or other serial lines, and no keypresses.

The distinction between a user program and an operating system is hard to make for an emulator. As far as it’s concerned, they’re both just programs.

It is possible to add code into hardware emulations for disk drives, the console and serial lines for ‘have I been busy recently?’ but, in practice, checking for recent keyboard activity is usually sufficient. If no-one’s pressed anything for the last 15 minutes, they’re probably not there. That is wrong in some cases, I agree, but it does work for most cases.

Slowing a system down when it has events triggering every millisecond or so presents pitfalls. I was there. I fell into them.

My solution was to tie most of the timing code to CPU T-states. A CPU is clocked: it does certain things at certain times, and all of that is driven by the CPU’s clock circuit. CPUs execute machine instructions, and how long each one takes depends on the instruction. For a Z80 CPU this might be anywhere from 4 to 20 or more T-states, where a T-state is one cycle of the CPU clock.

At 4 MHz, a Z80 T-state is 250 nanoseconds long (1/4,000,000 of a second), so even the shortest instructions (4 T-states) take a microsecond or more.
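As a quick check of that arithmetic, here is a minimal C sketch that converts T-states to nanoseconds for a given clock. The helper names `tstate_ns` and `instr_ns` are hypothetical, not taken from z80sim.

```c
/* Length of one T-state (one clock cycle) in nanoseconds. */
static double tstate_ns(double clock_hz)
{
    return 1e9 / clock_hz;
}

/* Time taken by an instruction lasting `tstates` T-states, in nanoseconds. */
static double instr_ns(unsigned tstates, double clock_hz)
{
    return tstates * tstate_ns(clock_hz);
}
```

For example, a 4-T-state NOP at 4 MHz comes out at 1000 ns, which is one microsecond.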

If you slow the CPU down, it won’t be able to execute as many instructions in a millisecond as it did previously. If the instructions that check for some condition (Transmit Buffer Empty, Read Data Available, Disk Index pulse present, etc.) take longer to run than the interval at which the condition occurs, you’ll get problems such as overruns, or a disk needing to go around again. Depending on how the operating system code is written, you may never notice the condition at all.

Tying the hardware timing to the CPU clock means you can slow down ‘the universe’ while you debug the CPU. Instead of a disk always spinning at 300 RPM, it spins at 300 RPM only when the CPU clock is 4 MHz. At 400 kHz it spins at 30 RPM, and at 4 Hz it spins at 0.0003 RPM. The world slows down while the CPU sleeps or you debug the program.
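One way to picture this, as a sketch under stated assumptions: give the emulated drive an index pulse every fixed number of T-states instead of every 200 ms of host time. The constant names and `apparent_rpm()` below are hypothetical. They show that a revolution defined in T-states looks like 300 RPM at 4 MHz and scales linearly with the clock.

```c
/* Hypothetical drive model: one revolution lasts REV_TSTATES T-states.
 * 300 RPM at 4 MHz => 0.2 s per revolution => 800,000 T-states. */
#define NOMINAL_HZ   4000000.0
#define NOMINAL_RPM  300.0
#define REV_TSTATES  ((unsigned long)(60.0 * NOMINAL_HZ / NOMINAL_RPM))

/* Rotation speed an outside observer would see if the emulated
 * CPU actually executes clock_hz T-states per real second. */
static double apparent_rpm(double clock_hz)
{
    return 60.0 * clock_hz / (double)REV_TSTATES;
}
```

At 20 MHz, the same drive would appear to spin at 1500 RPM.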

It works the other way too. If your simulation can run the CPU at 20 MHz (5 times faster than the real thing), your hardware speeds up too: the performance of your disk drives and serial lines improves along with that of the CPU. That does lead to an issue, though. Some things are meant to be real-time, like the real-time clock that Cromix uses. It’s not a modern device with battery-backed CMOS that you read the time from; this was the 1980s. If you don’t make some effort to read the real-time clock roughly twice a second (every 512 ms), your clock will lose time and never recover (until you next boot Cromix and/or reset the system time).

If you’re debugging something and do spend 15 minutes in a microsecond, your real-time clock is going to lose time. Short of different hardware or a different approach by Cromix itself, that can’t be helped. However, when the CPU has merely been slowed down (for example, hibernating while you’ve been busy with other things), the system still seems to cope with updating real-world time twice a second.

Instead of driving timers from host OS interrupts every so many milliseconds, microseconds or nanoseconds, my Windows build of z80sim drives them after a set number of T-states. Hardware state updates occur between CPU instructions, so as the CPU speeds up or slows down, the hardware keeps in step. It’s pretty simple and it seems to work really well. It does allow spending 15 minutes in a microsecond if you need to, before moving on to the next instruction. Time passes differently in the CPU’s universe than it does outside the system.

The code (in sim0.c ver 0.08 or later) looks like:

```c
void cpu_z80(void)
{
    ...
    int ..., tsTimer = 0, ms = 0, hibernate = 0; // gss
    time_t now, lastActivity;

    lastActivity = time(NULL);

    do {
        ...
        states = (*op_sim[memrdr(PC++)]) (); /* execute next opcode */
        ...

        // gss: 1 ms timer tied to T-states
        tsTimer += states;
        if (tsTimer >= 4000) {          // If we were running at 4 MHz, this'd be 1 ms
            tsTimer -= 4000;            // keep any overshoot for the next ms
            ts1ms();                    // tell the world

            if (++ms == 10000) {        // every 10 s of T-state time
                now = time(NULL);
                hibernate = 0;
                if (getActivityFlag()) lastActivity = now;
                if (now - lastActivity > 15*60) hibernate = 1; // 15 mins real-time
                ms = 0;
            }

            if (hibernate) {
                // while hibernating, check for activity on every T-state ms
                if (getActivityFlag()) { hibernate = 0; lastActivity = time(NULL); }
                os_sleep(20);           // every T-state 1 ms, wait 20 ms real-time
            }
        }
        ...
    } while (cpu_state == CONTIN_RUN);
    ...
}
```

We assume the Z80 CPU was intended to run at 4 MHz, as was typical in that era, so 1 millisecond corresponds to 4000 clock cycles (T-states).

The CPU emulation is already counting T-states, so we tap into that with our own tsTimer variable. When a machine instruction takes the count to or past 4000, we subtract 4000 and call a ts1ms() function. That function weaves its way through all of the IO device software so that IO is synchronized to this one common timer.
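Stripped of the rest of the emulator, the accumulator can be sketched as a small self-contained C function. This is not the z80sim source. `TS_PER_MS`, `run_tstates()` and `ts1ms_stub()` are hypothetical stand-ins, and the per-instruction T-state counts are supplied as an array rather than coming from `op_sim[]`.

```c
#define TS_PER_MS 4000            /* 1 ms at an assumed 4 MHz clock */

static int ticks;                 /* how many times the 1 ms tick has fired */

/* Stands in for ts1ms(); a real emulator would update every device here. */
static void ts1ms_stub(void) { ticks++; }

/* Feed per-instruction T-state counts through the accumulator.
 * Returns the leftover T-states to carry into the next call. */
static int run_tstates(const int *states, int n, int tsTimer)
{
    for (int i = 0; i < n; i++) {
        tsTimer += states[i];
        if (tsTimer >= TS_PER_MS) {
            tsTimer -= TS_PER_MS; /* keep the overshoot rather than zeroing */
            ts1ms_stub();
        }
    }
    return tsTimer;
}
```

Subtracting 4000 rather than resetting to zero carries any overshoot into the next millisecond, so no T-states are lost over time. A single `if` (rather than a loop) is enough here because a real Z80 instruction takes far fewer than 4000 T-states.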

We also wait for 10,000 of those ticks (10 seconds’ worth of 4 MHz T-states) before checking for activity and getting the outside-world time from Windows (the host operating system). If there hasn’t been any activity for 15 real-time minutes, we set the hibernate flag.

While hibernating, we wait for 20 real milliseconds after every T-state millisecond. This drops host CPU usage to about 5% (or less if the host is running the Z80 faster than 4 MHz).
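The 5% figure is easy to check. This sketch assumes that emulating one T-state millisecond costs `work_ms` of host time and that each sleep lasts exactly `sleep_ms`. Under those assumptions, the host CPU share is work / (work + sleep). The function name is hypothetical.

```c
/* Approximate host CPU share while hibernating: each T-state millisecond
 * costs work_ms of host time to emulate, followed by a sleep of sleep_ms. */
static double hibernate_duty(double work_ms, double sleep_ms)
{
    return work_ms / (work_ms + sleep_ms);
}
```

With 1 ms of work and a 20 ms sleep, that is 1/21, or about 4.8%. If the host emulates that millisecond in 0.25 ms, the figure drops to about 1.2%. Host sleep granularity may also stretch the 20 ms, which would only lower the figure further.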

Whilst in hibernate mode, we check for activity more frequently, because we don’t want to wait up to 10 seconds for the simulator to wake up. Instead we check every 21-ish milliseconds (1 T-state millisecond + 20 real-time milliseconds).
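The same idle logic can be restructured into a testable form by passing the host time in, rather than calling `time(NULL)` inside the loop. This is a sketch, not the z80sim code. `struct idle_state`, `idle_tick()` and the constants are hypothetical, and `activity` stands for whatever `getActivityFlag()` would report. Unlike the original, it also skips the 20 ms sleep on the tick that wakes up.

```c
#include <time.h>

#define CHECK_EVERY_MS      10000      /* 10 s of T-state time */
#define IDLE_LIMIT_SECS     (15 * 60)  /* 15 minutes of real time */
#define HIBERNATE_SLEEP_MS  20

/* Hypothetical state holder; the original keeps these as locals. */
struct idle_state {
    int    ms;             /* T-state milliseconds since the last check */
    time_t last_activity;  /* host time of the last observed activity */
    int    hibernate;      /* 1 once the idle limit has been exceeded */
};

/* Call once per T-state millisecond with the current host time and the
 * activity flag. Returns how many real milliseconds the caller should sleep. */
static int idle_tick(struct idle_state *s, time_t now, int activity)
{
    if (++s->ms == CHECK_EVERY_MS) {           /* slow periodic check */
        s->ms = 0;
        if (activity) s->last_activity = now;
        s->hibernate = (now - s->last_activity > IDLE_LIMIT_SECS);
    } else if (s->hibernate && activity) {     /* fast wake-up path */
        s->hibernate = 0;
        s->last_activity = now;
    }
    return s->hibernate ? HIBERNATE_SLEEP_MS : 0;
}
```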

If you step through the operating system code, the T-state count increases at the same rate as you work your way through the instructions. A T-state-based 1 ms timer fires at exactly the same point in the code as it would have if you weren’t debugging. Disks slow down with you. UARTs / serial lines slow down with you. Even the hibernation process slows down with you: it will still hibernate, but you can spend 15 minutes on a single instruction if you have to.

Obviously, there’s a bit more to it:

– the ts1ms() function driving device / IO ticks

– the getActivityFlag() function seeing if something is happening or not.

I think both are fairly generic and should be usable for / connectable to any(?) device. Feel free to look through the code in cromemco-iosim.c and others.
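Here is one hypothetical way that fan-out might be wired up. Each device registers a 1 ms tick handler, and any device can raise a shared activity flag that the main loop reads and clears. None of these names (`register_device`, `tick_all`, `note_activity`, `get_activity`, `uart_tick`) come from z80sim. Whether the real getActivityFlag() clears the flag when read is also an assumption.

```c
#include <stddef.h>

typedef void (*tick_fn)(void);     /* a device's 1 ms tick handler */

#define MAX_DEVICES 8
static tick_fn devices[MAX_DEVICES];
static size_t  n_devices;
static int     activity_flag;      /* set by any device that did something */

static int register_device(tick_fn fn)
{
    if (n_devices == MAX_DEVICES) return -1;   /* table full */
    devices[n_devices++] = fn;
    return 0;
}

/* Called by a device on a keypress, a sector transfer, a byte sent, etc. */
static void note_activity(void) { activity_flag = 1; }

/* Read-and-clear, so each burst of activity is reported once. */
static int get_activity(void)
{
    int f = activity_flag;
    activity_flag = 0;
    return f;
}

/* Fan the single T-state millisecond out to every registered device. */
static void tick_all(void)
{
    for (size_t i = 0; i < n_devices; i++)
        devices[i]();
}

/* Example device: a UART whose tick handler just counts ticks. */
static int uart_ticks;
static void uart_tick(void) { uart_ticks++; }
```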

You can find the source code for version 0.08 of the Windows build of z80sim at cpm/z80sim.

Using a T-state-based 1 ms timer keeps everything in sync, even if I speed up or slow down the CPU. Importantly, it seems to have solved all of the timing issues I was getting with Cromix (Running Cromix on Windows) when I tried to reduce CPU usage while it was idle.

This is part of the CP/M topic.